Probability theory forms the cornerstone of machine learning, enabling data scientists and engineers to make informed decisions in the face of uncertainty. By understanding and mastering probability concepts, one can effectively model and analyze complex systems, extract meaningful insights from data, and build robust machine-learning algorithms. This article explores some of the fundamental probability concepts that are essential for anyone seeking to excel in the field of machine learning.

Probability Basics:

Probability basics lay the foundation for understanding probability theory. They involve concepts such as the sample space, events, and the probability axioms. The sample space is the set of all possible outcomes of a random experiment. An event is a subset of the sample space, representing a particular outcome or a combination of outcomes. The probability axioms (non-negativity, normalization, and additivity) provide a formal framework for assigning probabilities to events: no probability is negative, the probability of the whole sample space is 1, and the probability of a union of mutually exclusive events is the sum of their individual probabilities.

Examples

Let’s consider the rolling of a fair six-sided die. The sample space consists of all possible outcomes: {1, 2, 3, 4, 5, 6}. Each outcome is equally likely, so the probability of rolling any specific number, say 3, is 1/6. When all outcomes are equally likely, the probability of an event can be calculated by dividing the number of favorable outcomes by the total number of outcomes. For example, the event "rolling an odd number" is the subset {1, 3, 5}, so its probability is 3/6 or 1/2.
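The die example can be checked with a few lines of Python. This is a minimal sketch that assumes equally likely outcomes; the helper name `probability` is made up for illustration and is not part of any library.

```python
from fractions import Fraction

# Sample space of a fair six-sided die: every outcome is equally likely.
sample_space = {1, 2, 3, 4, 5, 6}

def probability(event, space=sample_space):
    """P(event) = favorable outcomes / total outcomes (equally likely case only)."""
    favorable = event & space  # ignore anything outside the sample space
    return Fraction(len(favorable), len(space))

p_three = probability({3})       # rolling a 3
p_odd = probability({1, 3, 5})   # rolling an odd number

print(p_three)  # 1/6
print(p_odd)    # 1/2

# Normalization axiom: the whole sample space has probability 1.
print(probability(sample_space) == 1)  # True
```

Using `Fraction` instead of floats keeps the results exact, which makes it easy to compare them with the hand-computed values.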

Probability Distributions:

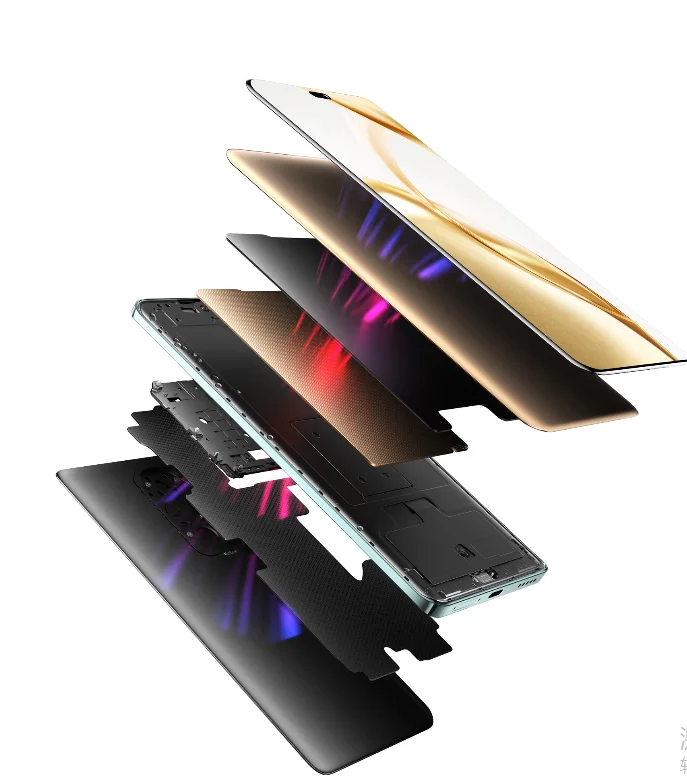

Probability distributions describe how probability is spread over the possible values of a random variable. A random variable is a variable that takes on different values based on the outcomes of a random experiment. Common probability distributions used in machine learning include the Gaussian (normal) distribution, which is symmetric and bell-shaped and is often used to model continuous variables. The Bernoulli distribution models a single binary event with two possible outcomes. The categorical distribution extends this to a single event with more than two discrete outcomes, and the multinomial distribution describes the counts of those outcomes over repeated trials. Understanding probability distributions helps in accurately modeling real-world phenomena and selecting appropriate algorithms for specific tasks.

Examples

Suppose we have a dataset of students’ exam scores. We can model the distribution of scores using a Gaussian (normal) distribution. Let’s assume the scores follow a normal distribution with a mean of 70 and a standard deviation of 10. This distribution allows us to estimate the likelihood of observing scores in a given range. For instance, we can calculate the probability of a student scoring above 80 using the cumulative distribution function of the Gaussian. A score of 80 is one standard deviation above the mean, so this probability is about 16%.
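The probability of scoring above 80 can be computed with Python's standard library. This sketch assumes the scores really are normally distributed with mean 70 and standard deviation 10, as stated in the example; the variable names are illustrative.

```python
from statistics import NormalDist

# Exam scores modeled as Normal(mean=70, standard deviation=10).
scores = NormalDist(mu=70, sigma=10)

# P(X > 80) = 1 - P(X <= 80), where cdf is the cumulative distribution function.
p_above_80 = 1 - scores.cdf(80)

print(round(p_above_80, 4))  # 0.1587
```

The same object also answers range questions, for example `scores.cdf(80) - scores.cdf(60)` for the probability of a score between 60 and 80.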

Conditional Probability:

Conditional probability measures the probability of an event occurring given that another event is known to have occurred. It is denoted as P(A|B), where A and B are events, and is defined as P(A|B) = P(A and B) / P(B) whenever P(B) is greater than 0. Conditional probability is crucial in machine learning for capturing dependencies between variables. It allows us to update our beliefs based on new information. For example, in natural language processing, conditional probability is used in language modeling to predict the next word in a sentence based on the previous words.

Examples

Consider a standard 52-card deck. If we draw one card at random, the probability of drawing a heart is 13/52, or 1/4. Now, let’s say we draw a card and learn only that it is red. The probability that the card is a heart given that it is red is a conditional probability. The deck has 26 red cards, 13 of which are hearts, so the conditional probability is 13/26, or 1/2.
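The card example can be verified by enumerating a standard 52-card deck and applying the definition P(A|B) = P(A and B) / P(B). This is a small sketch; the helper names `prob`, `is_heart`, and `is_red` are made up for illustration.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["hearts", "diamonds", "clubs", "spades"]

# Every (rank, suit) pair is one equally likely card: 13 * 4 = 52 cards.
deck = list(product(RANKS, SUITS))

def is_heart(card):
    return card[1] == "hearts"

def is_red(card):
    return card[1] in ("hearts", "diamonds")

def prob(predicate):
    """Probability that a uniformly drawn card satisfies the predicate."""
    return Fraction(sum(1 for card in deck if predicate(card)), len(deck))

p_heart = prob(is_heart)
p_red = prob(is_red)
p_heart_and_red = prob(lambda card: is_heart(card) and is_red(card))

# Definition of conditional probability.
p_heart_given_red = p_heart_and_red / p_red

print(p_heart)            # 1/4
print(p_heart_given_red)  # 1/2
```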

Bayes’ Theorem:

Bayes’ theorem provides a framework for updating beliefs or probabilities based on new evidence. It relates the conditional probability of an event A given event B to the conditional probability of event B given event A. Mathematically, it is expressed as P(A|B) = (P(B|A) * P(A)) / P(B). Bayes’ theorem is widely used in machine learning for tasks such as parameter estimation, model selection, and decision-making under uncertainty. Bayesian inference, which relies on Bayes’ theorem, allows us to update prior beliefs with observed data to obtain posterior probabilities.

Examples

Imagine a medical test for a rare disease. The test is 99% accurate, meaning it correctly identifies a positive case 99% of the time and correctly identifies a negative case 99% of the time. However, only 0.1% of the population actually has the disease. If a person tests positive, what is the probability that they actually have the disease? Bayes’ theorem can help us calculate this probability by incorporating the prior probability of having the disease and the accuracy of the test. Because the disease is so rare, false positives outnumber true positives, and the answer is only about 9%.
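The arithmetic behind the medical test example can be sketched as follows. The function name `posterior` and the parameter names `sensitivity` and `specificity` are illustrative labels for the two 99% accuracy figures in the example; they are not taken from the article.

```python
def posterior(prior, sensitivity, specificity):
    """P(disease | positive test) via Bayes' theorem.

    prior       = P(disease)
    sensitivity = P(positive | disease)
    specificity = P(negative | no disease)
    """
    true_positive = sensitivity * prior
    false_positive = (1 - specificity) * (1 - prior)
    evidence = true_positive + false_positive  # P(positive), by total probability
    return true_positive / evidence

p_disease_given_positive = posterior(prior=0.001, sensitivity=0.99, specificity=0.99)

print(f"{p_disease_given_positive:.4f}")  # 0.0902
```

Out of every 100,000 people, about 99 sick people and about 999 healthy people test positive, which is why a positive result still leaves the probability of disease near 9%.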

Markov Chains:

Markov chains are mathematical models that describe probabilistic transitions among a set of states over time. The probability of transitioning from one state to another depends only on the current state and not on the previous states. Markov chains are widely used in machine learning for tasks such as natural language processing, where they can be used to model the probability of word sequences, and reinforcement learning, where Markov decision processes extend them to model sequential decision-making. Understanding Markov chains and their properties helps in modeling dynamic systems and designing efficient algorithms.

Examples

Suppose we want to predict the weather (sunny, cloudy, or rainy) based on the previous day’s weather. We can represent this as a Markov chain. Let’s assume that the probability of transitioning from a sunny day to a cloudy day is 0.3 and the probability of transitioning from a cloudy day to a rainy day is 0.4. These two numbers are only part of the model: a complete chain needs a transition probability for every ordered pair of states, and the probabilities leaving each state must sum to 1. Given the full set of transition probabilities and the fact that today is sunny, we can estimate the likelihood of tomorrow being cloudy or rainy.
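A small sketch of the weather chain is shown below. Only two transition probabilities come from the example (sunny to cloudy is 0.3, cloudy to rainy is 0.4); every other entry in the table is a hypothetical value chosen so that each row sums to 1, and the names `TRANSITIONS` and `step` are made up for illustration.

```python
STATES = ["sunny", "cloudy", "rainy"]

# TRANSITIONS[current][nxt] = P(tomorrow is nxt | today is current).
# Only sunny->cloudy (0.3) and cloudy->rainy (0.4) come from the example;
# all other entries are assumed values so that each row sums to 1.
TRANSITIONS = {
    "sunny":  {"sunny": 0.6, "cloudy": 0.3, "rainy": 0.1},
    "cloudy": {"sunny": 0.3, "cloudy": 0.3, "rainy": 0.4},
    "rainy":  {"sunny": 0.2, "cloudy": 0.4, "rainy": 0.4},
}

def step(distribution):
    """Advance a probability distribution over states by one day."""
    return {
        nxt: sum(distribution[cur] * TRANSITIONS[cur][nxt] for cur in STATES)
        for nxt in STATES
    }

today = {"sunny": 1.0, "cloudy": 0.0, "rainy": 0.0}  # we know today is sunny
tomorrow = step(today)
day_after = step(tomorrow)

print({s: round(p, 2) for s, p in tomorrow.items()})
# {'sunny': 0.6, 'cloudy': 0.3, 'rainy': 0.1}
print({s: round(p, 2) for s, p in day_after.items()})
# {'sunny': 0.47, 'cloudy': 0.31, 'rainy': 0.22}
```

Because the next state depends only on the current one, forecasting further ahead is just a matter of calling `step` repeatedly.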

Random Variables and Expectations:

Random variables are variables that take on different values based on the outcomes of a random experiment. They can be discrete or continuous. Expectation, also known as the mean, is a measure of the central tendency of a random variable. It represents the average value of the random variable over all possible outcomes, weighted by their probabilities. For a discrete random variable, E(X) is the sum of x * P(X = x) over all possible values x. Expectation is an important concept for summarizing uncertain quantities, evaluating machine learning models, and designing objective functions for optimization.

Examples

Consider a dataset of housing prices. We can define a random variable “X” to represent the price of a randomly chosen house from the dataset. If every house is equally likely to be chosen, the expectation or mean of this random variable, denoted as E(X), equals the average price of a house in the dataset. It provides a measure of central tendency and helps us understand the typical price level of houses in the dataset.
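The housing example can be illustrated with a toy dataset. The four prices below are hypothetical values invented for this sketch, and each house is assumed to be equally likely to be chosen.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical prices, invented for illustration only.
prices = [250_000, 300_000, 300_000, 450_000]

# Probability mass function of X when each house is equally likely to be drawn.
counts = Counter(prices)
pmf = {price: Fraction(count, len(prices)) for price, count in counts.items()}

# E(X) = sum over all values x of x * P(X = x).
expected_price = sum(price * p for price, p in pmf.items())

print(expected_price)  # 325000
```

The probability-weighted sum gives the same number as the ordinary average `sum(prices) / len(prices)`, which is what the expectation reduces to under uniform sampling.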

Monte Carlo Methods:

Monte Carlo methods are computational techniques that use random sampling to estimate numerical results. They are particularly useful when exact calculations are infeasible or impossible. Monte Carlo methods are widely employed in machine learning for tasks such as approximate inference, model evaluation, and optimization. By generating random samples from a probability distribution, Monte Carlo methods provide an approximation of the desired result. They offer powerful tools for tackling complex problems in machine learning and enable practitioners to make informed decisions based on sampled data.

Examples

Suppose we want to estimate the value of π using a Monte Carlo method. We can draw random points uniformly within a unit square and determine the proportion that falls within the quarter circle of radius 1 centered at one corner of the square. The quarter circle has area π/4 and the square has area 1, so multiplying this proportion by 4 gives an estimate of π. As we increase the number of random points, the estimate tends to become more accurate. Monte Carlo methods allow us to approximate complex integrals, simulate outcomes, and solve optimization problems by generating random samples.
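A minimal sketch of the π estimate is shown below. The function name `estimate_pi` and the fixed seed are choices made for this illustration so that runs are repeatable; the estimate itself varies with the seed and the number of points.

```python
import random

def estimate_pi(num_points, seed=0):
    """Estimate pi by sampling points uniformly in the unit square."""
    rng = random.Random(seed)  # local generator, so the result is reproducible
    inside = 0
    for _ in range(num_points):
        x, y = rng.random(), rng.random()
        # The point lies inside the quarter circle of radius 1 centered at (0, 0).
        if x * x + y * y <= 1.0:
            inside += 1
    # (quarter-circle area) / (square area) = pi / 4
    return 4 * inside / num_points

for n in (100, 10_000, 100_000):
    print(n, estimate_pi(n))
```

The error of this estimator shrinks roughly in proportion to one over the square root of the number of points, so each extra digit of accuracy costs about 100 times more samples.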

These examples illustrate how probability concepts are applied in various scenarios within machine learning. By understanding these concepts and applying them effectively, practitioners can make informed decisions, build accurate models, and extract valuable insights from data.

Role of Probability in Machine Learning

The role of probability in machine learning is crucial as it provides a framework for handling uncertainty and making informed decisions based on data. Probability theory allows us to model and quantify uncertainty, which is inherent in real-world data and predictions. It enables machine learning algorithms to estimate and update probabilities, assess risks, and optimize decision-making processes. By leveraging probability, machine learning algorithms can handle complex data patterns, generalize from limited samples, and make reliable predictions in uncertain scenarios.

Great Learning offers a comprehensive and free course on Probability in Machine Learning, providing learners with a solid foundation in probability theory and its application to machine learning algorithms. The course covers key concepts such as random variables, probability distributions, Bayes’ theorem, conditional probability, and Markov chains. Participants will gain hands-on experience through practical exercises and real-world examples, learning how to apply probability in various machine learning tasks such as classification, regression, and clustering. With this course, learners can enhance their understanding of the probabilistic foundations of machine learning and strengthen their ability to build robust and accurate models.

Great Learning also offers various other free online courses on machine learning. Enrollment is free, and you receive a free certificate after completing a course.

Conclusion:

Mastering probability concepts is indispensable for anyone working in the field of machine learning. A solid understanding of probability basics, probability distributions, conditional probability, Bayes’ theorem, Markov chains, random variables, expectation, and Monte Carlo methods empowers data scientists to build robust models, make informed decisions under uncertainty, and extract meaningful insights from data. By honing these essential concepts, practitioners can unlock the full potential of machine learning and drive innovation in diverse domains.